How Postman saves support engineers from writing code

Postman is a convenient tool for describing and executing requests, checking their status, chaining requests together, looping over them, and building scripts. Its main advantage is that you need to write almost no code.

Some time ago, we described the approach we use to put products under monitoring. At a high level, the plan is as follows:

- Create a map of user scenarios that describes, step by step, all the actions users perform in the product.

- Go through the interface and identify the requests behind each user action.

- Prepare scripts that simulate user actions by executing request chains.

Postman handles the last step in this process, and even an engineer with no programming experience can use it. We have checked: learning to build scripts in Postman takes only a couple of hours. After that, a support specialist can independently prepare new requests, schedule them, and monitor the results.

How to work with Postman

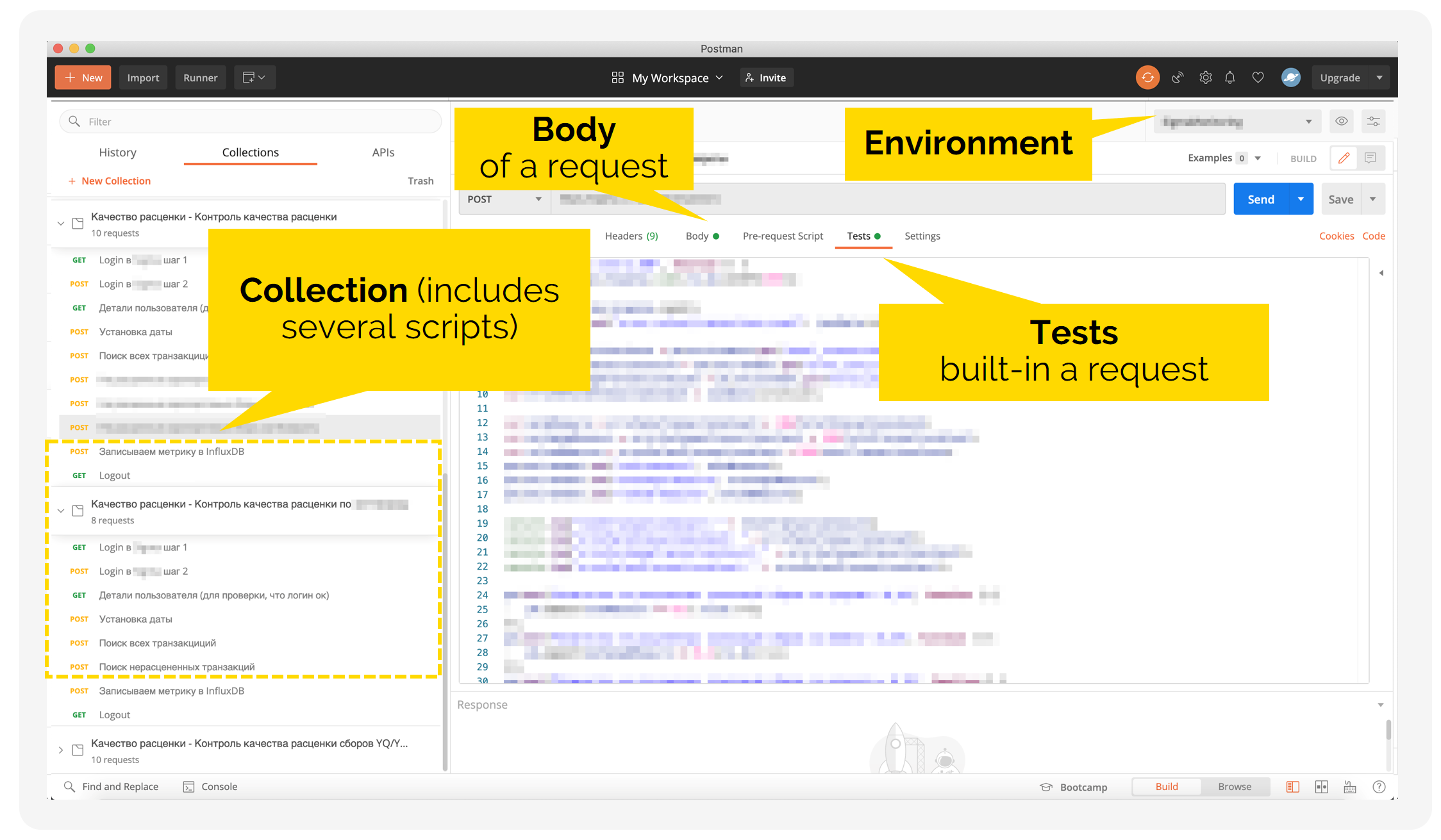

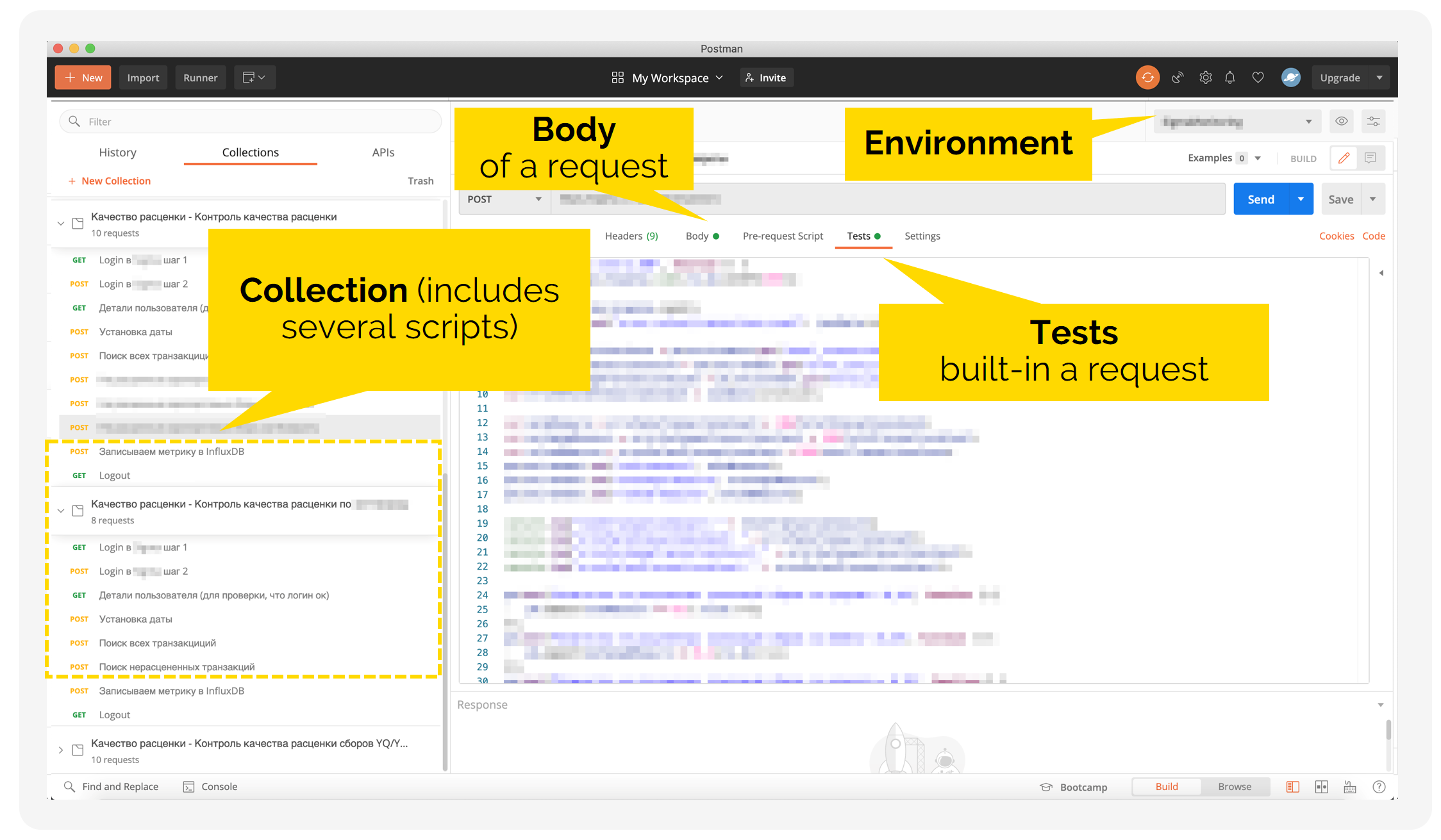

So, you have decided which scenarios to monitor and prepared a list of requests that correspond to user actions. In Postman, scripts are assembled from these requests like a construction set. Besides requests, the building blocks are collections and environments.

- Environments hold the values of the variables used in scripts: server addresses, folder names, and so on. In our setup, one environment corresponds to one product.

- A collection describes what to do with these variables. It is a set of requests, and in our case one collection is one monitoring scenario. The same collection can be run against different environments, with the necessary variables substituted. You do not need to write a new authorization script for each system: you can run a ready-made collection with one click.
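The split between environments and collections can be illustrated with a minimal Python sketch. It is not how Postman is implemented; it only mimics the `{{variable}}` placeholder syntax that Postman uses in requests. The environment names, URLs, and request paths are hypothetical.

```python
import re

# Hypothetical environments: one per product, as described above.
# All names and URLs are invented for illustration.
ENVIRONMENTS = {
    "product_a": {"base_url": "https://a.example.com", "folder": "inbox"},
    "product_b": {"base_url": "https://b.example.com", "folder": "archive"},
}

# One hypothetical "collection": an ordered list of request templates
# that refer to environment variables through {{name}} placeholders.
COLLECTION = [
    {"name": "Log in", "method": "POST", "url": "{{base_url}}/api/login"},
    {"name": "Open folder", "method": "GET",
     "url": "{{base_url}}/api/folders/{{folder}}"},
]

PLACEHOLDER = re.compile(r"\{\{([\w.-]+)\}\}")


def resolve(template, variables):
    """Replace each {{name}} placeholder with its value from `variables`."""
    def substitute(match):
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"variable {name!r} is not defined in this environment")
        return str(variables[name])
    return PLACEHOLDER.sub(substitute, template)


def render_collection(collection, variables):
    """Return a copy of the collection with every URL resolved."""
    return [{**request, "url": resolve(request["url"], variables)}
            for request in collection]


if __name__ == "__main__":
    # The same collection is rendered once per environment.
    for env_name, variables in ENVIRONMENTS.items():
        for request in render_collection(COLLECTION, variables):
            print(env_name, request["method"], request["url"])
```

The collection is defined once and the environment is the only thing that changes between products, which is why a ready-made authorization scenario can be reused without rewriting it.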

The workflow, then, is simple: write a request, save it to a collection, select the desired environment, and run it. If necessary, you can add tests right away, to be run for each request or at each monitoring step. Our task was only to make sure that the system checks the modules' performance on a schedule, so in this case we did without additional tests.
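For readers who want to see what a saved collection roughly looks like on disk, here is a minimal Python sketch that builds one as a dictionary and prints it as JSON. The layout follows the Postman Collection v2.1 format as we understand it (`info`, `item`, `request`, and an optional `event` with a test script); the helper names, request names, and URLs are hypothetical, and in practice Postman generates this file for you on export.

```python
import json

# Schema identifier of the Postman Collection v2.1 format.
SCHEMA_V21 = "https://schema.getpostman.com/json/collection/v2.1.0/collection.json"


def make_request_item(name, method, url, expect_status=None):
    """Build one request entry; optionally attach a status-code test."""
    item = {"name": name, "request": {"method": method, "header": [], "url": url}}
    if expect_status is not None:
        # Postman tests are small JavaScript snippets stored line by line.
        item["event"] = [{
            "listen": "test",
            "script": {
                "type": "text/javascript",
                "exec": [
                    f'pm.test("Status is {expect_status}", function () {{',
                    f"    pm.response.to.have.status({expect_status});",
                    "});",
                ],
            },
        }]
    return item


def make_collection(name, items):
    """Wrap request entries into a collection document."""
    return {"info": {"name": name, "schema": SCHEMA_V21}, "item": list(items)}


if __name__ == "__main__":
    # Hypothetical two-step scenario; only the first step carries a test.
    collection = make_collection("Login scenario", [
        make_request_item("Log in", "POST", "{{base_url}}/api/login", expect_status=200),
        make_request_item("Open folder", "GET", "{{base_url}}/api/folders/{{folder}}"),
    ])
    print(json.dumps(collection, indent=2))
```

The optional test mirrors the point above: a request works without any test attached, and a check can be added later without touching the rest of the scenario.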

How we built Postman into our process

We use Postman to develop monitoring scenarios, for testing, and for integrations between systems.

- The scripts are exported as JSON files and stored in a Git repository in TFS.

- A scheduled check is configured in the TFS pipeline. For us, integration with TFS is another advantage of Postman, since all our teams started working with TFS this year. In TFS, you can immediately see the results of executed requests, which is very convenient when Postman is used to debug a product. Since this article is about monitoring, we will not go into more detail here.

- The JSON file is run with Newman, a command-line tool for running collections that was also developed by the Postman team. It executes the requests, receives the responses, and calculates the metrics specified in the script.

- The script results are saved to InfluxDB, from which the data is fed to Grafana dashboards. There you can set critical thresholds so that an alert is sent automatically when an indicator goes beyond the specified value.

Summary

When we were designing the monitoring approach, we planned to use Zabbix. On closer inspection, it turned out not to suit our purposes: we could not write the scripts with its native tools and had to prepare them in Python. Without a tool like Postman collections, that work has to be redone every time.

In Postman, all the logic is built into the interface, so an engineer only has to substitute the necessary data in specific sections of the code. This significantly lowers the barrier to entry for anyone who needs to work with requests, whether they are developers, analysts, testers, or anyone else.

As a result, support becomes more reliable regardless of how fluent a particular engineer is in code. At the same time, experience is easy to transfer from team to team, with no compromise in process quality.