Serverless computing and Function-as-a-Service

Serverless computing, and the Function-as-a-Service (FaaS) solutions built on it, let developers evolve products with a focus on business features. We experimented with these technologies and concluded that the existing solutions are still a bit too raw for production use. Let's cover the topic step by step.

The term ‘serverless computing’ is somewhat misleading: servers obviously still underpin the product, but developers no longer have to manage them. At its core, Serverless continues the same virtualization ideas as the earlier as-a-Service (aaS) technologies, letting the team focus on the feature's code and development. If IaaS is an abstraction of hardware and containers are an abstraction of apps, then FaaS is an abstraction of the service's business logic.

The idea is that you no longer pack the app server, database, and load balancer into a container. Developers isolate a function in the code, upload it to a cloud platform, and run it when required. Provisioning instances, deploying code, allocating resources, launching the web endpoint, monitoring performance, and maintaining security all happen automatically.

FaaS provides maximum flexibility in capacity management: while idle, the function consumes no resources at all, and when needed, the platform quickly allocates enough capacity for virtually any workload. Whether the app serves one user or a hundred thousand at once, the performance of a FaaS-based system barely degrades, whereas a product with a traditional architecture would run into trouble unless it had been provisioned for the peak in advance.
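A rough sketch of the arithmetic behind scaling to zero: a concurrency-based autoscaler (roughly the idea behind Knative's autoscaler) divides the number of in-flight requests by a target per instance and rounds up. The target of 100 requests per instance and the cap of 1,000 instances below are illustrative assumptions, not figures from our experiments.

```python
import math

def desired_instances(concurrent_requests: int, target_per_instance: int = 100,
                      max_instances: int = 1000) -> int:
    """Instances needed to keep per-instance concurrency at or below the target.

    target_per_instance and max_instances are hypothetical, illustrative values.
    """
    if concurrent_requests <= 0:
        return 0  # scale to zero: an idle function occupies no instances
    needed = math.ceil(concurrent_requests / target_per_instance)
    return min(needed, max_instances)  # the platform caps how far it scales out

for load in (0, 1, 250, 100_000):
    print(load, desired_instances(load))
```

The same function covers both ends of the range described above: zero instances when nobody is using the app, and a proportional number of instances as the load grows.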

The team doesn't have to worry about the backend and deployment processes. Ideally, implementing a new feature comes down to uploading a single function. As a result, development proceeds faster and Time-to-Market shrinks. Across the company as a whole, introducing FaaS encourages a platform approach, since Serverless computing requires either a pool of cloud resources from a provider or a Kubernetes cluster.

How it works in reality

The market already offers plenty of Serverless platforms. We examined two of them: AWS Lambda from Amazon and Knative. The first is a proprietary service that works within the Amazon cloud; the second runs on Kubernetes.

AWS Lambda is a production-ready option with all the capabilities mentioned above. The platform handles the routine operations: it deploys apps, monitors the performance of instance groups, and provides fault tolerance and scaling.

The main disadvantage is that it is a proprietary product, which means you are tied to the Amazon cloud and have to use other products within its ecosystem. Should you decide to change platforms, you will almost certainly have to rework the product substantially, because the rules in the new infrastructure can differ considerably.
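One common way to soften this lock-in is to keep the business logic in plain, provider-independent functions and wrap it in a thin provider-specific adapter. The sketch below assumes a Python Lambda function invoked through an API Gateway proxy integration, where the request body arrives as a JSON string in `event["body"]` and the response is a dict with `statusCode`, `headers`, and `body`. The `calculate_discount` logic and its payload fields are hypothetical examples.

```python
import json

def calculate_discount(order_total: float, loyalty_years: int) -> float:
    """Provider-independent business logic (hypothetical example)."""
    rate = min(0.05 * loyalty_years, 0.25)  # 5% per loyalty year, capped at 25%
    return round(order_total * rate, 2)

def lambda_handler(event, context):
    """Thin AWS Lambda adapter: unpack the API Gateway proxy event into plain
    arguments and wrap the result in an HTTP-style response dict."""
    payload = json.loads(event.get("body") or "{}")
    discount = calculate_discount(float(payload["order_total"]),
                                  int(payload["loyalty_years"]))
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"discount": discount}),
    }
```

With this split, moving to another platform means rewriting only the adapter (`lambda_handler`), while `calculate_discount` and its tests stay untouched.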

Knative appealed to us more because it runs on top of Kubernetes.

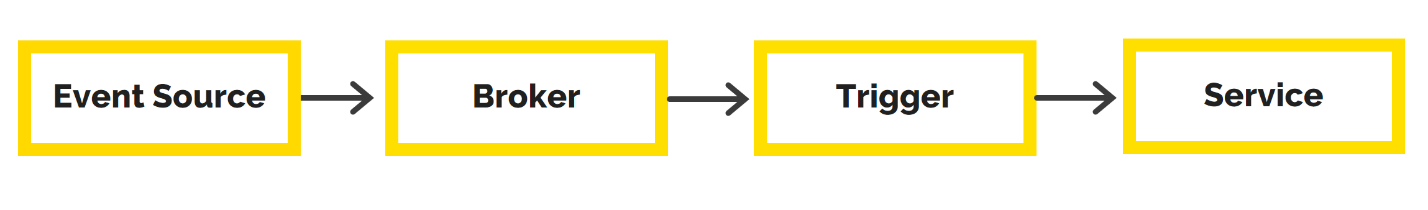

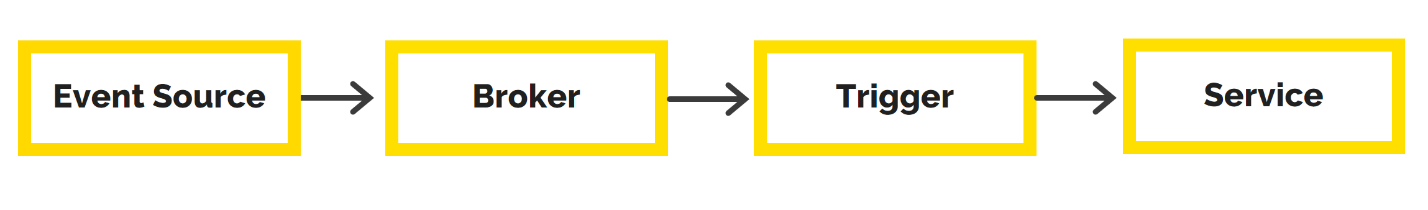

Unlike Lambda, it runs on your own platform, so you have to dig deeper into its architecture. Here's what it looks like:

- Event source – a FaaS platform entity that interacts with external event sources. It can be triggered by an HTTP request, a message from a message broker, or an event from the platform itself.

- Broker – a buffer that receives and stores events from the Event source. The Broker can be backed by Kafka, can work in memory, etc.

- Trigger – a component subscribed to the Broker that retrieves events from it and sends them to the Service for execution.

- Service – the working function, an isolated piece of business logic.
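To show how these four pieces fit together, here is a toy in-memory model of the Event source → Broker → Trigger → Service flow. It is not Knative's API: the class names simply mirror the concepts above, and filtering by an event `type` attribute is an assumption loosely modeled on how Knative Triggers filter events.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class Event:
    type: str
    data: dict

@dataclass
class Trigger:
    """Subscribes one Service to events of a single type."""
    event_type: str
    service: Callable[[Event], object]

@dataclass
class Broker:
    """In-memory buffer; a real broker might be backed by Kafka instead."""
    queue: deque = field(default_factory=deque)
    triggers: list = field(default_factory=list)

    def publish(self, event: Event) -> None:
        self.queue.append(event)

    def subscribe(self, trigger: Trigger) -> None:
        self.triggers.append(trigger)

    def dispatch(self) -> list:
        """Drain the buffer, delivering each event to every matching Trigger's Service."""
        results = []
        while self.queue:
            event = self.queue.popleft()
            for trigger in self.triggers:
                if trigger.event_type == event.type:
                    results.append(trigger.service(event))
        return results

# Service: an isolated piece of business logic (hypothetical example)
def greet(event: Event) -> str:
    return f"Hello, {event.data['name']}!"

broker = Broker()
broker.subscribe(Trigger("user.created", greet))
# Event source: e.g. an incoming HTTP request turned into an event
broker.publish(Event("user.created", {"name": "Alice"}))
broker.publish(Event("order.paid", {"id": 42}))  # no Trigger matches, so this toy drops it
print(broker.dispatch())
```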

From the developer's standpoint, the process looks almost the same as with the already familiar containerized apps; only the unit of deployment changes: (1) write a function, (2) pack it into a Docker image, (3) upload it.
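Step (1) can be as small as an HTTP handler. The sketch below uses only Python's standard library (`wsgiref`), which is fine for illustration but not a production server; the `app`/`main` names and the JSON response shape are assumptions. It relies on the fact that Knative Serving tells the container which port to listen on through the `PORT` environment variable; the Docker image's entrypoint would call `main()`.

```python
import json
import os
from wsgiref.simple_server import make_server

def app(environ, start_response):
    """The function itself: a minimal WSGI app that answers every request with JSON."""
    body = json.dumps({
        "message": "Hello from a function",
        "path": environ.get("PATH_INFO", "/"),
    }).encode("utf-8")
    start_response("200 OK", [
        ("Content-Type", "application/json"),
        ("Content-Length", str(len(body))),
    ])
    return [body]

def main() -> None:
    """Container entrypoint: listen on the port passed in $PORT (8080 if unset)."""
    port = int(os.environ.get("PORT", "8080"))
    with make_server("", port, app) as server:
        server.serve_forever()
```

Keeping `app` separate from `main` means the function can be exercised locally or in tests without starting a server.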

Knative's main drawback is that it has no built-in logging or monitoring tools, and these are critically important for FaaS solutions. When a product is split into functions and there is no effective monitoring and logging, you cannot quickly locate the source of a failure; you have to examine each function separately.
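Until the platform provides proper observability, one mitigation is to have every function write structured (JSON) log lines that carry a shared correlation ID, so a log aggregator can reassemble one request's path across functions. This is a minimal sketch with Python's standard `logging` module; the field names, the `X-Correlation-ID` header convention, and the `resize-image` function name are assumptions, not a Knative feature.

```python
import json
import logging
import sys
import uuid

class JsonFormatter(logging.Formatter):
    """Render each record as one JSON line that log collectors can parse."""
    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "level": record.levelname,
            "function": record.name,
            "correlation_id": getattr(record, "correlation_id", None),
            "message": record.getMessage(),
        })

def get_logger(function_name: str) -> logging.Logger:
    logger = logging.getLogger(function_name)
    if not logger.handlers:  # avoid duplicate handlers when a warm instance is reused
        handler = logging.StreamHandler(sys.stdout)
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
        logger.setLevel(logging.INFO)
    return logger

def handle(headers: dict) -> dict:
    """Hypothetical function body: reuse the caller's ID or start a new trace."""
    correlation_id = headers.get("X-Correlation-ID") or str(uuid.uuid4())
    log = get_logger("resize-image")
    log.info("processing started", extra={"correlation_id": correlation_id})
    # Pass the same ID on to any downstream function call or published event.
    return {"X-Correlation-ID": correlation_id}

handle({"X-Correlation-ID": "req-123"})
# prints {"level": "INFO", "function": "resize-image", "correlation_id": "req-123", "message": "processing started"}
```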

FaaS advantages

The approach proves effective when the user doesn't need an instant response and when the workload can fluctuate between 0 and 100%:

- Scheduled tasks: export/import operations in financial reporting and accounting systems, and backup solutions.

- Sending asynchronous user notifications (push, email, SMS).

- Machine learning, the Internet of Things, and AI systems can all benefit from these features. Serverless lets computation run closer to the endpoint, i.e. the user, which reduces latency and lowers the data transfer load.
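Notification sending fits FaaS well because each message can be handled independently. Queue-driven invocations are commonly delivered at least once, so such a function should be idempotent. In this sketch, the channel senders are hypothetical stubs, and the in-memory `sent_ids` set stands in for a durable store: a real function would need external storage, since the platform creates and destroys instances at will.

```python
from typing import Callable, Dict, Set

# Hypothetical channel senders; real ones would call a push/email/SMS provider.
def send_push(user: str, text: str) -> str:
    return f"push to {user}: {text}"

def send_email(user: str, text: str) -> str:
    return f"email to {user}: {text}"

def send_sms(user: str, text: str) -> str:
    return f"sms to {user}: {text}"

SENDERS: Dict[str, Callable[[str, str], str]] = {
    "push": send_push,
    "email": send_email,
    "sms": send_sms,
}

# Stand-in for a durable deduplication store (e.g. a database table).
sent_ids: Set[str] = set()

def notify(message: dict) -> str:
    """Handle one queued notification; safe to call again with the same message."""
    if message["id"] in sent_ids:
        return "duplicate skipped"
    sender = SENDERS.get(message["channel"])
    if sender is None:
        raise ValueError(f"unknown channel: {message['channel']}")
    result = sender(message["user"], message["text"])
    sent_ids.add(message["id"])  # record only after a successful send
    return result
```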

Drawbacks of Serverless

1. This architecture is not well suited to long-running processes. If a function runs almost continuously, its resource consumption is the same as that of a traditional product, so the pay-per-use advantage disappears.
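A back-of-the-envelope model shows why. Pay-per-use billing grows with the time the function actually runs, while a dedicated instance costs the same whether it is busy or idle, so above some utilization the always-on option wins. The prices below are hypothetical placeholders (with FaaS assumed to charge a per-second premium), not any provider's actual rates.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600  # 2,592,000 seconds in a 30-day month

def faas_monthly_cost(utilization: float, price_per_busy_second: float) -> float:
    """Pay-per-use: billed only for the seconds the function is actually running."""
    return utilization * SECONDS_PER_MONTH * price_per_busy_second

def break_even_utilization(instance_monthly_price: float,
                           price_per_busy_second: float) -> float:
    """Utilization above which an always-on instance is cheaper than FaaS."""
    return instance_monthly_price / (SECONDS_PER_MONTH * price_per_busy_second)

# Hypothetical prices, chosen only to illustrate the shape of the trade-off.
FAAS_PRICE = 0.00005   # per busy second (assumed)
INSTANCE_PRICE = 40.0  # per month for a comparable always-on instance (assumed)

for u in (0.01, 0.10, 0.50, 1.00):
    print(f"utilization {u:.0%}: FaaS {faas_monthly_cost(u, FAAS_PRICE):.2f} "
          f"vs instance {INSTANCE_PRICE:.2f}")
print(f"break-even at {break_even_utilization(INSTANCE_PRICE, FAAS_PRICE):.0%}")
```

Whatever the real prices are, the structure is the same: FaaS cost is linear in utilization, and for a function that is busy almost all the time it converges on (or exceeds) the cost of simply running a server.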

2. Currently, the best platforms tie a company to a cloud provider, whether it's AWS, Microsoft Azure, or Google Cloud. Kubernetes-based solutions have yet to reach this level.

3. FaaS isn't a magic pill that lets developers forget about infrastructure and simply push features to production. You still have to plan the architecture and design the functions and their interactions, for example with Domain-Driven Design (DDD). Otherwise, the product turns into a pile of tightly coupled functions that are hard to understand. Developers won't be able to deploy and change such functions independently. In the worst case, handling a single user request will involve calling every function.

Our conclusion: the Serverless age is still a few years away

...given that developers keep evolving the technology, and open source platforms in particular will inevitably catch up with AWS Lambda.

Such projects may be driven by the goal of cutting the costs of running energy-intensive products. For now, though, it may be easier for developers to work the old-fashioned way. Serverless expertise and command of the tools are useful skills, but companies should wait a couple of years before using them in production.