How we use facial recognition to find test phones

True Engineering runs a large number of mobile development projects, and every stage of each project needs a set of test devices. Not only mobile developers need smartphones, but testers, designers, and PMs do too. To determine where a given phone is, we use an online database backed by employee facial recognition. In this article, we describe how it was implemented.

We decided to automate the task of finding the right device. The first step was to write a mobile application that could work out its location in a room from nearby Wi-Fi hotspots and report it. We also taught the smartphones to report their OS version and battery charge to the server.

We installed the app on the test devices and used it for several months. It turned out to be convenient, though far from perfect: batteries would drain, devices would simply switch off, Wi-Fi hotspots would be moved from one place to another, and all the geolocation could really tell you was that the device was somewhere in the office.

The way we created the mobile app

We came up with the concept of a system that would recognize employees by their faces and test devices by special markers, ask for confirmation of a device's change of status, and then update the online database, which any employee could view without getting up from their chair.

Facial Recognition

As of 2018, facial recognition is, for the most part, a mature technology. We therefore did not reinvent the wheel by training models of our own and used a ready-made solution instead. The most convenient option seemed to be the face_recognition module, since it requires no additional training and works very quickly even without GPU acceleration.

With the help of face_locations, we could detect employees' faces in photos while face_encodings was used to extract the features of a specific employee's face.

The collected encodings were stored in the database. To identify a specific employee, the face_distance function was used to compute the "difference" between the encoding of the detected face and the encodings in the database.
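The matching step can be sketched in a few lines. This is a minimal illustration and not our production code: to our understanding, face_distance returns the Euclidean distance between encodings, so the sketch uses math.dist as a stand-in to stay free of dependencies. The names euclidean_distances and identify, the 0.6 threshold, and the tiny three-number "encodings" are assumptions made for readability (real encodings have 128 numbers).

```python
import math

# Assumed threshold: distances above it are treated as "unknown person".
TOLERANCE = 0.6


def euclidean_distances(known_encodings, candidate):
    """Distance from the candidate encoding to each known encoding."""
    return [math.dist(known, candidate) for known in known_encodings]


def identify(database, candidate, tolerance=TOLERANCE):
    """Return the name with the closest encoding, or None if nobody is close enough."""
    if not database:
        return None
    names = list(database)
    distances = euclidean_distances([database[name] for name in names], candidate)
    best = min(range(len(names)), key=distances.__getitem__)
    return names[best] if distances[best] <= tolerance else None


# Hypothetical database: employee name -> stored encoding (shortened to 3 numbers).
database = {"alice": [0.1, 0.2, 0.3], "bob": [0.9, 0.8, 0.7]}

print(identify(database, [0.12, 0.18, 0.33]))  # close to alice's encoding
print(identify(database, [5.0, 5.0, 5.0]))     # far from everyone
```

Picking the single closest encoding and then checking it against a threshold keeps strangers from being matched to whichever employee happens to be nearest.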

In fact, at this stage we could have gone a step further and built a classifier, for instance one based on KNN, so that the system would be less sensitive to changes in employees' faces over time. In practice, however, that turned out to be much more time-consuming. Simply averaging the face encoding currently stored in the database with the one the system captured when the device's status changed was already enough to keep errors from accumulating.
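The averaging mentioned above amounts to an element-wise mean of two vectors. A minimal sketch, assuming encodings are kept as plain lists of floats; update_encoding is a hypothetical name, not a function from any library:

```python
def update_encoding(stored, observed):
    """Average the stored encoding with a newly observed one, element by element."""
    if len(stored) != len(observed):
        raise ValueError("encodings must have the same length")
    return [(s + o) / 2 for s, o in zip(stored, observed)]


# Shortened example vectors; real encodings have 128 numbers.
stored = [0.0, 1.0, 0.5]
observed = [1.0, 0.0, 0.5]
print(update_encoding(stored, observed))
```

Because the result replaces the stored value each time, older observations lose half of their weight with every update, so the stored encoding gradually follows changes in a person's appearance.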

So, is it all in the bag now?

It would seem that we have learned to recognize devices and faces and so the job is done. What more could it possibly take?

In reality, our work had only just begun. The focus now was to make all of the system's components work stably and efficiently "on the battlefield". We had to reduce the server's resource overhead when idle, think through the use cases, and work out what the system should even look like graphically.

Interface

Perhaps the most important part of developing such a system is the interface. Some may debate this view, but the user is the central element of a system like this. With Tkinter, the front end can be implemented very quickly.

A few remarks on Tkinter:

- Pay attention to which units set the indentation/element dimensions (relative or absolute)

- Remember that relative and absolute units can be used together (their values are simply added together)

- The .attributes("-fullscreen", True) call lets us build a single-window application that switches to full-screen mode, so that elements of the system interface do not confuse users.
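These remarks can be illustrated with Tkinter's place() geometry manager, where relative options (relx, relwidth) and absolute ones (x, width) on the same axis are summed. The sketch below is an assumption about how such a layout could look, not our actual interface code; card_place_options, run_demo, the column count, and the device names are all hypothetical.

```python
def card_place_options(column, columns=4, gap_px=8):
    """Build place() options for a card in one column of a fixed grid.

    relx/relwidth are fractions of the parent size; x/width are pixels
    that Tk adds to the relative part.
    """
    return {
        "relx": column / columns,  # relative: where the column starts
        "x": gap_px,               # absolute: shift right by the gap
        "relwidth": 1 / columns,   # relative: one column wide
        "width": -2 * gap_px,      # absolute: shrink to leave a gap on both sides
        "y": gap_px,
        "height": 120,
    }


def run_demo():
    """Open a full-screen window with four cards (needs a display)."""
    import tkinter as tk  # imported here so the helper above works without a display

    root = tk.Tk()
    root.attributes("-fullscreen", True)
    root.bind("<Escape>", lambda event: root.destroy())  # a way out of full screen
    for column, name in enumerate(["Device A", "Device B", "Device C", "Device D"]):
        card = tk.Label(root, text=name, relief="ridge")
        card.place(**card_place_options(column))
    root.mainloop()


print(card_place_options(1))
```

Calling run_demo() on a machine with a display opens the window. Each card takes a quarter of the window width minus a fixed 8-pixel margin on each side, whatever the screen resolution.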

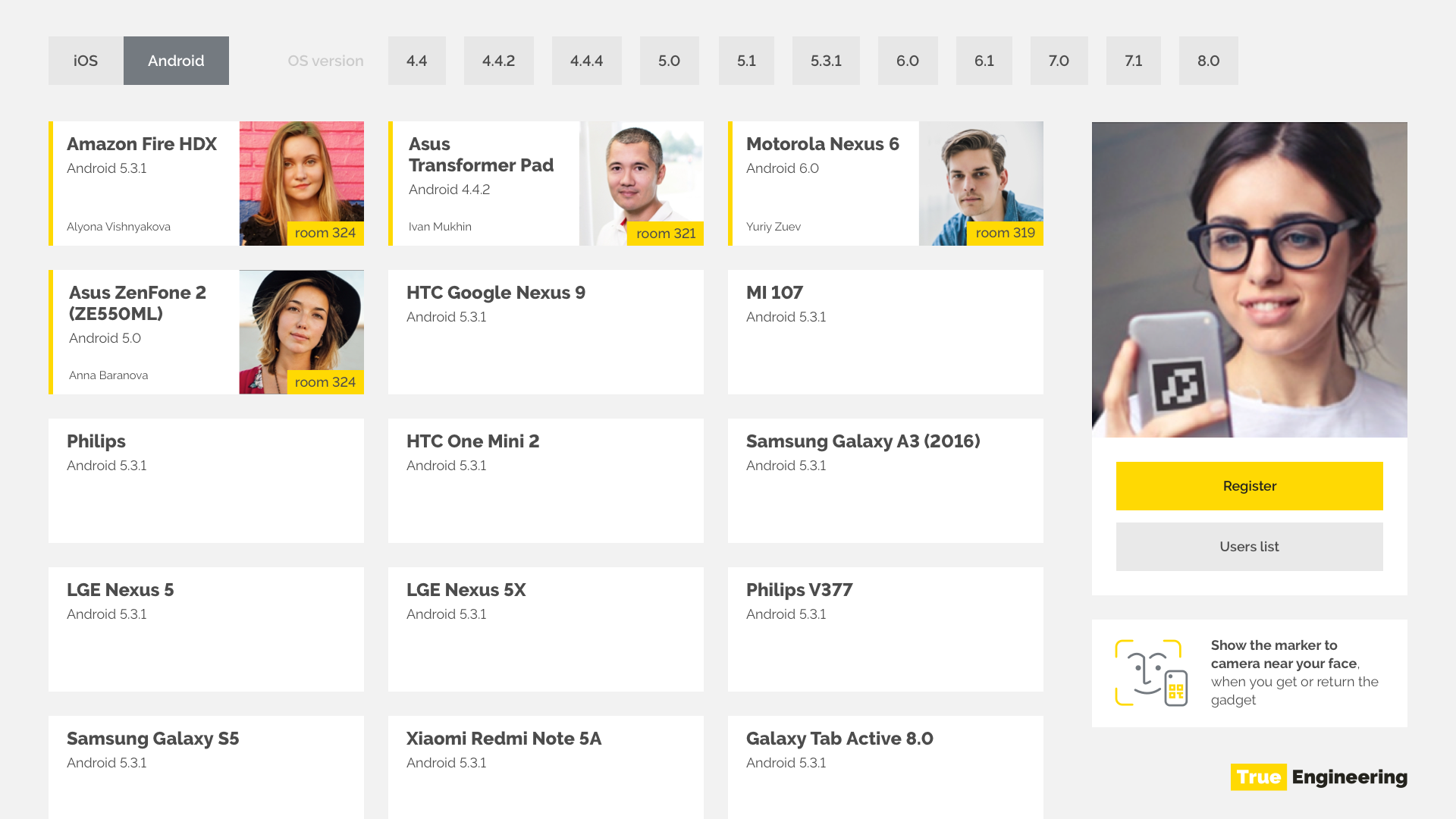

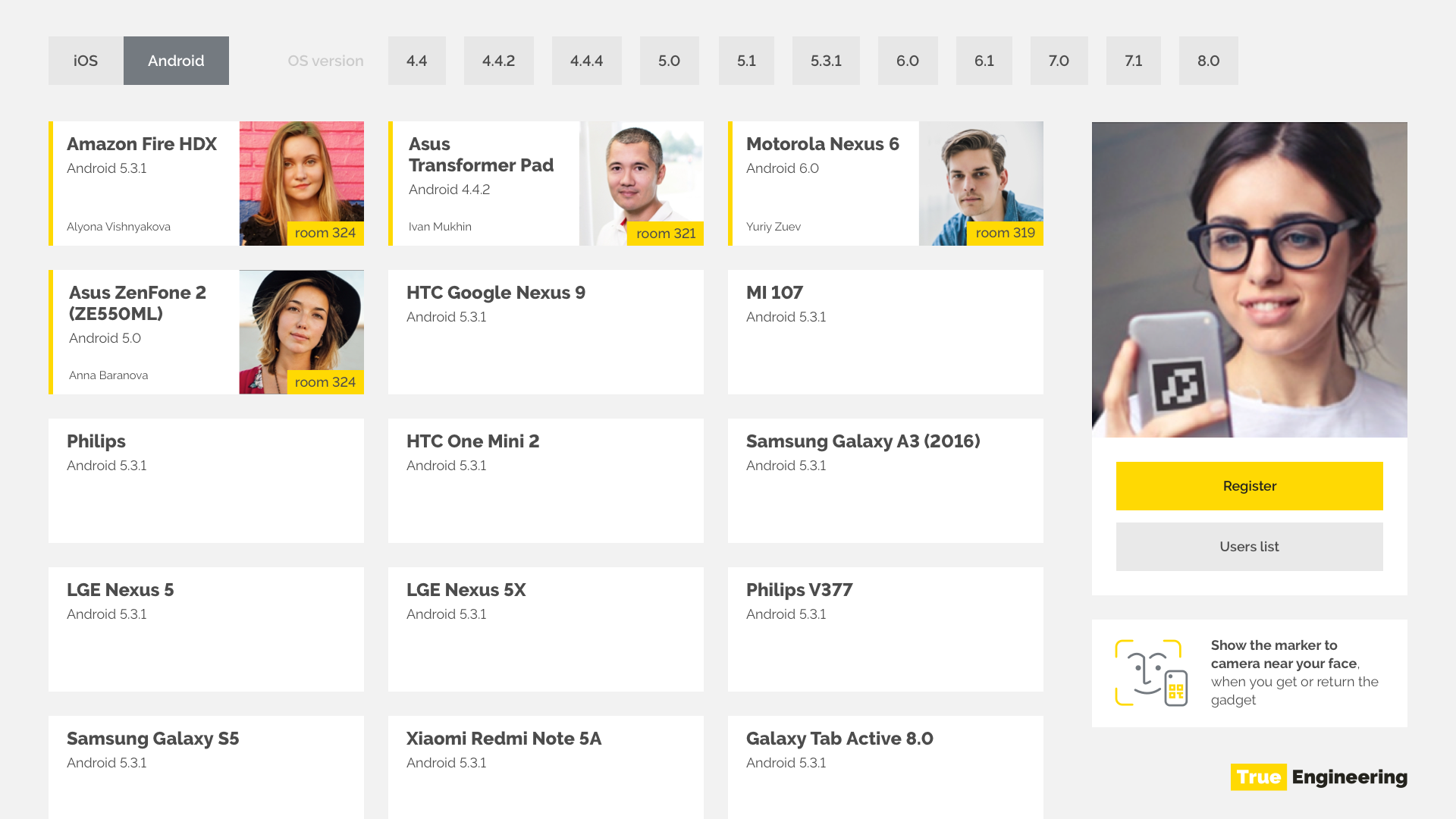

The interface consists of cards with information on the device and the user currently using the device. Most of the screen is occupied by the card directory which is the main accounting tool. At the top, there is a filter that allows us to filter the directory by platform or operating system version.

Here are the key components of the interface:

- Device

The screen displays device cards with the version of the operating system, the name and ID of the device, as well as the user to whom the device is currently registered.

- Photo capture

To the right is a control unit that shows the image from the webcam, along with buttons for registration and for editing personal information.

The image is displayed to give the user feedback: they can see on the screen that the device's marker is actually in the frame.

- OS version selection

We made a list for choosing the OS version of interest, because oftentimes testing needs not a specific device but a specific Android or iOS version. The version filter is horizontal to save space and to keep the whole version list visible on a single screen without scrolling.

Optimization

A single run of any system component does not take much time. However, if marker recognition and face recognition run at the same time, trying to process all 30 frames per second that the camera provides will completely exhaust the resources of a computer without a GPU.

It is clear that 99% of the time the system would be doing this work for nothing.

To prevent this, the following optimization decisions were made:

- Only every eighth frame gets processed. The system response delay increases to about 8/30 of a second (roughly 0.27 seconds), while human response time is about one second, so the user does not notice the delay. This alone reduces the load on the system by a factor of eight.

- The system first searches the frame for a device marker, and only when one is detected does facial recognition start running. Since searching for markers in a frame is about 300 times cheaper than searching for faces, we decided that in standby mode we would only check for the presence of a marker.

- To reduce "stuttering" when searching for faces while there are no faces in the image, upsampling is disabled in the face_locations function by setting the number_of_times_to_upsample parameter to 0:

face_locations = face_recognition.face_locations(rgb_small_frame, number_of_times_to_upsample=0)

For this reason, the processing time of a frame with no faces in it is equal to the processing time of a frame where faces are easily detected.
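The first two decisions above, frame skipping and "marker first, faces second", can be sketched as a small generator. This is an illustration under assumptions, not our actual pipeline: process_stream, find_marker, and find_faces are hypothetical names, and the detectors are passed in as plain callables so that the example runs on fake frames.

```python
FRAME_STRIDE = 8  # process only every eighth frame


def process_stream(frames, find_marker, find_faces, stride=FRAME_STRIDE):
    """Yield (frame_index, marker, faces) for processed frames that contain a marker."""
    for index, frame in enumerate(frames):
        if index % stride != 0:
            continue  # skip seven out of every eight frames
        marker = find_marker(frame)
        if marker is None:
            continue  # standby mode: no marker, so the costly face search never runs
        yield index, marker, find_faces(frame)


# Fake camera feed: a (made-up) marker appears from frame 16 onwards.
frames = [{"marker": "dev-17" if i >= 16 else None, "faces": ["face"]} for i in range(32)]
face_calls = []


def find_marker(frame):
    return frame["marker"]


def find_faces(frame):
    face_calls.append(frame)  # count how often the expensive step runs
    return frame["faces"]


results = list(process_stream(frames, find_marker, find_faces))
print([index for index, _, _ in results])
print(len(face_calls))
```

Out of 32 frames only four are inspected at all, and the face search runs on just the two of them that contain a marker.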

So what do we end up with?

At the moment, the system is successfully deployed on a Mac mini (Late 2009) with a Core 2 Duo processor that we had on hand. During testing, it worked quite well even on a single virtual core with 1024 megabytes of RAM and 4 gigabytes of storage in a Docker container. A touch-screen display was connected to the Mac mini to keep the setup minimalistic.

Even users who did not use the old board now register new devices, and cases of unsuccessful searches have become much less common!

What's next?

Of course, there are still many things in the current system that could and should be improved:

- Ensuring that the OS controls do not appear along with dialog boxes (currently these are messagebox dialogs from the Tkinter package).

- Moving computations and server requests to a thread separate from interface processing (currently they are executed in the main thread, inside Tkinter's main loop, which freezes the interface while requests are being sent to the online database).

- Matching the interface to the same design as other corporate resources.

- Devising a full-fledged web interface for remote data viewing.

- Using voice recognition to confirm/cancel an action and fill in text fields.

- Implementing registration of multiple devices at the same time.
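For the threading item in the list above, one common pattern is to run slow work in a worker thread and hand the result back to the interface through a queue. The sketch below is a possible direction and not something already in the system; run_in_background and drain_results are hypothetical helpers built only on the standard library.

```python
import queue
import threading

results = queue.Queue()  # finished work waits here for the interface thread


def run_in_background(task, *args):
    """Run task(*args) in a worker thread and put its outcome on the queue."""
    def worker():
        try:
            results.put(("ok", task(*args)))
        except Exception as error:  # report failures instead of losing them
            results.put(("error", error))

    thread = threading.Thread(target=worker, daemon=True)
    thread.start()
    return thread


def drain_results(handle):
    """Call handle(status, value) for each finished task without blocking."""
    handled = 0
    while True:
        try:
            status, value = results.get_nowait()
        except queue.Empty:
            return handled
        handle(status, value)
        handled += 1
```

In a Tkinter application, drain_results would be called from a function that reschedules itself with root.after(100, ...), so that widgets are only ever touched from the main thread while requests to the online database run elsewhere.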