How tokenization simplifies working with sensitive data

Today we will talk about information security, focusing on the purpose of converting personal data into non-personal data and the way it is done.

Modern companies don't need to be told how complicated it is to work with personal data and store it correctly, or how serious the consequences of even small incidents can be, let alone leak scandals. There are also less obvious problems. For example, if a team builds a system that works with personal data, the product has to be tested somehow. For that, it is better to use data that is not real: modified, masked, and anonymized.

Developers have a whole range of technologies for different scenarios of working with personal data. Information is encrypted so that it can be transmitted securely. Numbers are automatically hidden with a mask so that a call center operator cannot see the specifics of a client's account. But we'd like to tell you about tokenization, a technology that was recently introduced in one of our projects.

What tokenization is

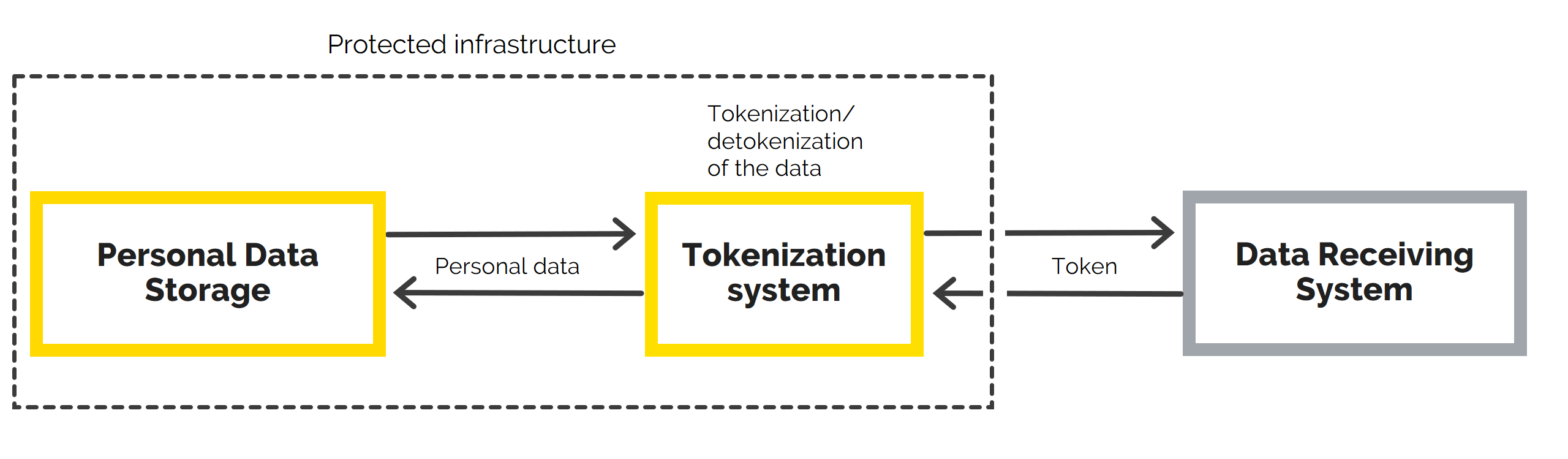

A token is an entity that replaces data in exchanges between information systems. The tokenization system serves as a layer between the protected database and the systems that receive the data. It assigns an identifier, a token, to the data stored at a particular position in the DB.

Only tokens are transmitted to external systems, so if the traffic is intercepted, the actual user data remains unknown to the attacker. When the tokenization system receives a response to a request, it performs the reverse operation and returns the result with the actual data to the working system.
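
The idea can be illustrated with a minimal sketch. It is not the service from our project: the `TokenVault` class, the `tok_` prefix, and the in-memory dictionaries are assumptions made for the example, and a real vault would be a protected database.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory token vault (illustration only)."""

    def __init__(self):
        self._value_by_token = {}
        self._token_by_value = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so the same value always maps to one token.
        if value in self._token_by_value:
            return self._token_by_value[value]
        # The token is random, so it cannot be computed back from the value.
        token = "tok_" + secrets.token_hex(8)
        self._value_by_token[token] = value
        self._token_by_value[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only code with access to the vault can recover the original value.
        return self._value_by_token[token]


vault = TokenVault()
token = vault.tokenize("Ivan Petrov")   # this is what goes to external systems
original = vault.detokenize(token)      # the reverse operation, done locally
```

Because the token is generated randomly rather than derived from the data, intercepting it reveals nothing without access to the vault itself.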

The nature of the task

Our customer had to comply with legislation that obliges Russian companies to process and store the personal data of Russian citizens on the territory of the Russian Federation. The difficulty was that our client works with an international information system, so we had to build a process that would neither interfere with business processes nor violate the law.

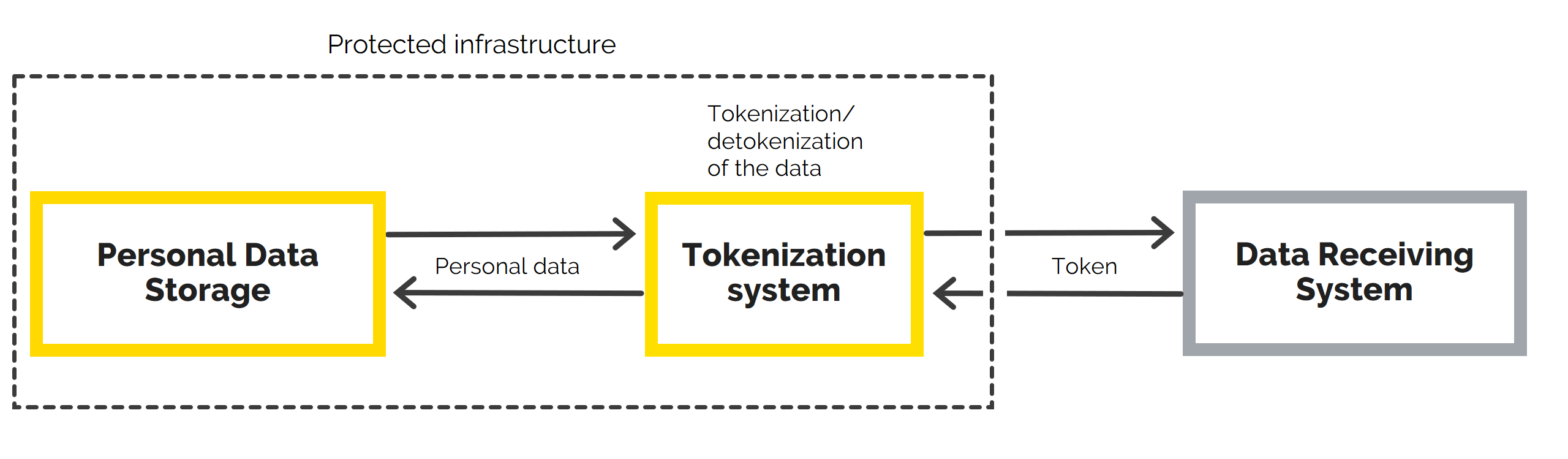

This is where we deemed it necessary to apply tokenization. Our service includes storage and data conversion tools, and the customer's working systems are integrated with it. The company's employees see actual user data in the UI, while the external system receives it in converted form. The converted data is also stored in the external DB. The tokenizer requests it, restores it to its original state, and stores the result in the customer's storage.
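
The round trip described above can be sketched roughly as follows. The field names, the `vault` dictionary, and the two helper functions are hypothetical and only show the direction of the conversions, not our actual implementation.

```python
import secrets

# Hypothetical names of the fields that count as personal data.
PERSONAL_FIELDS = ("full_name", "phone")

# token -> original value; stands in for the protected local storage.
vault = {}


def to_external(record: dict) -> dict:
    """Replace personal fields with tokens before the record leaves the local system."""
    converted = dict(record)
    for field in PERSONAL_FIELDS:
        if field in record:
            token = "tok_" + secrets.token_hex(8)
            vault[token] = record[field]
            converted[field] = token
    return converted


def from_external(record: dict) -> dict:
    """Restore the original values in a record received from the external system."""
    restored = dict(record)
    for field, value in record.items():
        if isinstance(value, str) and value in vault:
            restored[field] = vault[value]
    return restored


local_record = {"full_name": "Иван Петров", "phone": "+7 900 000-00-00", "plan": "premium"}
outbound = to_external(local_record)    # what the external system stores
round_trip = from_external(outbound)    # what employees see in the UI
```

In this sketch the tokens consist only of ASCII characters, so the external system never receives the Cyrillic originals, while non-personal fields such as `plan` pass through unchanged.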

It means that actual personal information doesn’t leave the Russian server. At the same time, from the point of view of business processes, the data of each user in the foreign system is stored and processed with absolute accuracy.

This scheme also lets the customer's staff enter personal data in Russian, because we no longer depend on the requirements of an international system that accepts only Latin characters.

Other cases when it can be useful

Tokenization can be used whenever you need to process private data. Medical information, personnel records, emails, and messages: tokens help a company transfer such data to a different location while maintaining security.

For online stores and other sites that sell something, tokenization provides insurance against the risks of processing payment data. Sensitive information is stored in a closed database at the provider, and tokens are used for purchases. The provider receives a token, conducts the transaction within its own infrastructure, and returns the result (the money has been debited, and the sale can go ahead).
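
A simplified sketch of this division of responsibility is shown below. The `PaymentProvider` class and its methods are invented for illustration and do not correspond to any real provider's API.

```python
import secrets


class PaymentProvider:
    """Hypothetical provider that keeps card numbers in its own closed storage."""

    def __init__(self):
        self._card_by_token = {}

    def register_card(self, card_number: str) -> str:
        token = "tok_" + secrets.token_hex(8)
        self._card_by_token[token] = card_number
        return token

    def charge(self, token: str, amount: int) -> dict:
        # The card number is looked up and used only inside the provider.
        if token not in self._card_by_token:
            return {"status": "declined", "reason": "unknown token"}
        return {"status": "approved", "amount": amount}


provider = PaymentProvider()
card_token = provider.register_card("4111111111111111")  # test card number

# The store keeps only the token next to the order, never the card number.
order = {"order_id": 1, "card_token": card_token, "amount": 2500}
result = provider.charge(order["card_token"], order["amount"])
```

The store's database in this sketch contains nothing that could be used to pay elsewhere, which is the point of the scheme.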

Banks, payment systems, and financial institutions use tokens so that customers don't have to update card details manually when a card is reissued. In this case, the new card is automatically attached to the already saved token. Tokenization also makes it easier to comply with legal requirements and industry standards such as PCI DSS.
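
Reattaching a reissued card to an existing token might look like this in a toy model. The function names and the `card_by_token` dictionary are assumptions for the example.

```python
import secrets

# token -> current card number; stands in for the issuer's protected storage.
card_by_token = {}


def save_card(card_number: str) -> str:
    """Create a token for a card and return it to whoever stores the payment method."""
    token = "tok_" + secrets.token_hex(8)
    card_by_token[token] = card_number
    return token


def reissue_card(token: str, new_card_number: str) -> None:
    """Attach the reissued card to the existing token; the token itself does not change."""
    if token not in card_by_token:
        raise KeyError("unknown token")
    card_by_token[token] = new_card_number


saved_token = save_card("4111111111111111")      # the old card (test number)
reissue_card(saved_token, "4242424242424242")    # the bank reissues the card
```

Services that saved `saved_token` keep using it as before, so the customer has nothing to re-enter.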

Differences between tokenization and encryption

A logical question: why create an additional service if the information can simply be encrypted? The key point is that tokenization is simpler to operate: the company doesn't have to convert its entire body of personal data, because that data stays in its original form inside the protected perimeter. Moreover, creating tokens generally takes less computing power than encrypting and decrypting information. It is also easier to scale with tokens, whereas with encryption the resource requirements grow faster.

Finally, encrypted data is typically stored in-house, while tokenization allows the company to use an external provider. As a result, the company itself holds less data worth stealing and becomes a less attractive target for cybercriminals, and the business can entrust its security to professionals.